Deep Learning

Ferdowsi University of Mashhad

Mahmood Amintoosi

Fall 1402 (autumn 2023)

Source book

Deep Learning with Python, by François Chollet

https://www.manning.com/books/deep-learning-with-python-second-edition

LiveBook

Github: Jupyter Notebooks

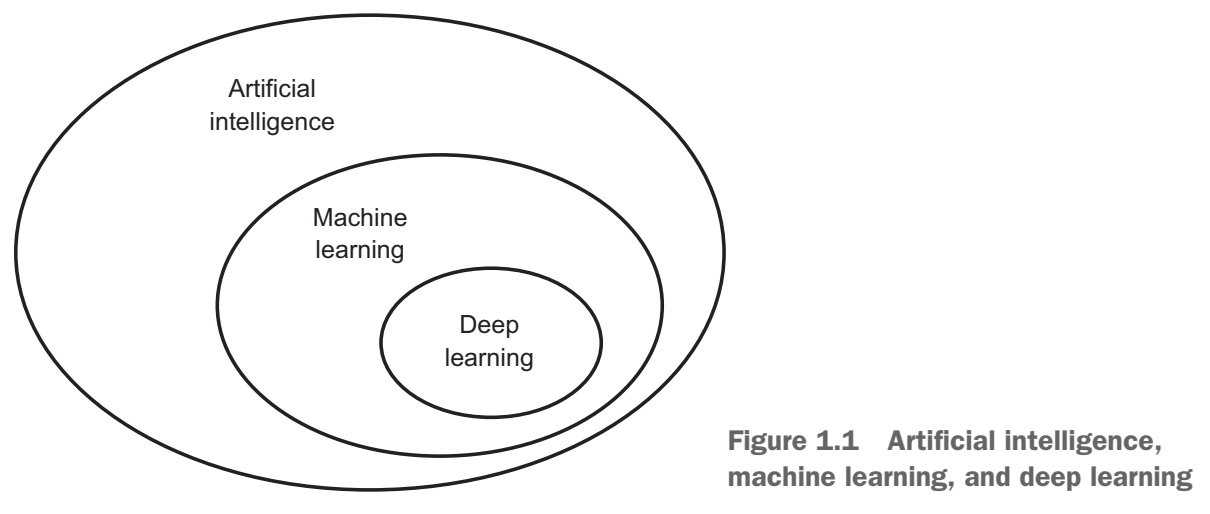

Chapter 1

What is deep learning?

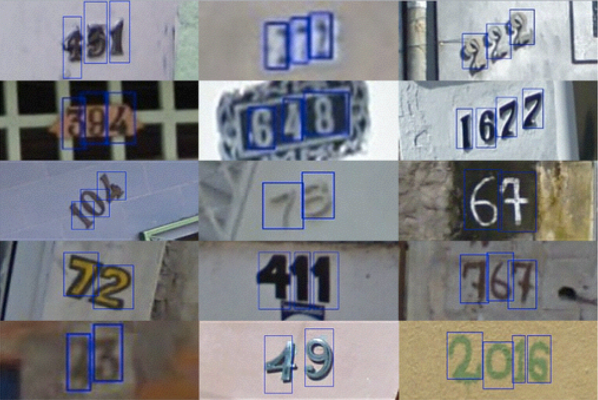

Applications

Google Street-View (and ReCaptchas)

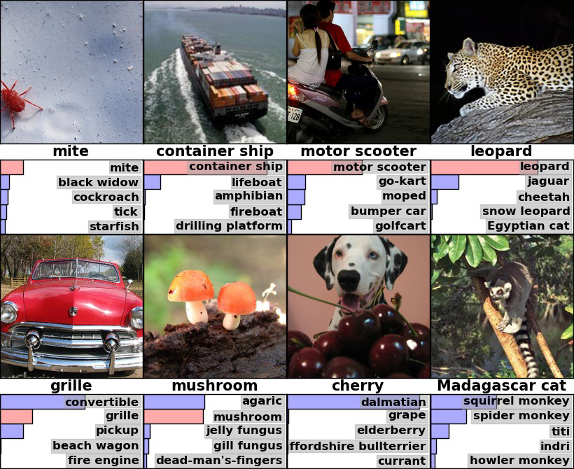

Image Classification

(now better than human level)

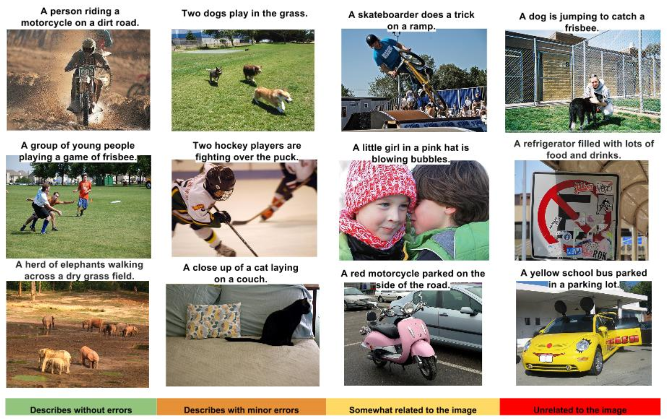

Captioning Images

Some good, some not-so-good

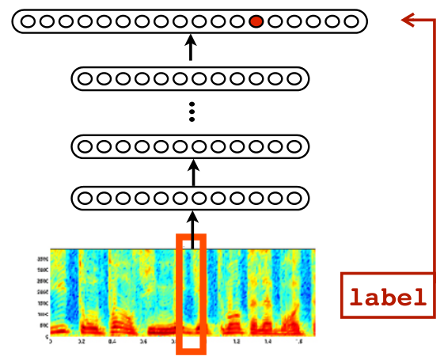

Speech Recognition

Android feature since Jellybean (v4.3, 2012)

Trained in ~5 days on 800 machine cluster

Embedded in phone since Android Lollipop (v5.0, 2014)

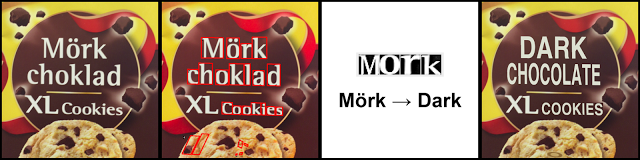

Translation

Google's Deep Models are on the phone

"Use your camera to translate text instantly in 26 languages"

Translations for typed text in 90 languages

Reinforcement Learning

Google DeepMind's AlphaGo

Learn to play Go from (mostly) self-play

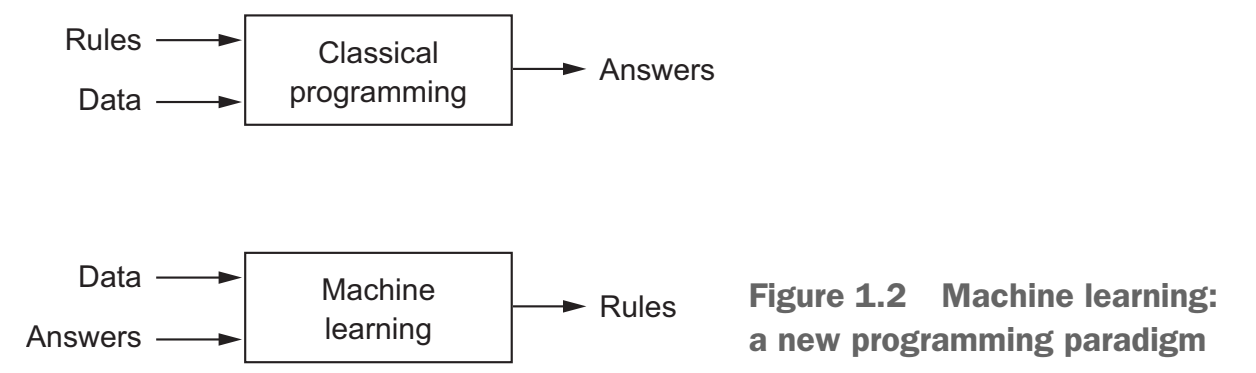

Machine learning vs. Classical programming

Machine learning: a new programming paradigm

Machine learning

- Input data points

- Examples of the expected output

- A way to measure whether the algorithm is doing a good job

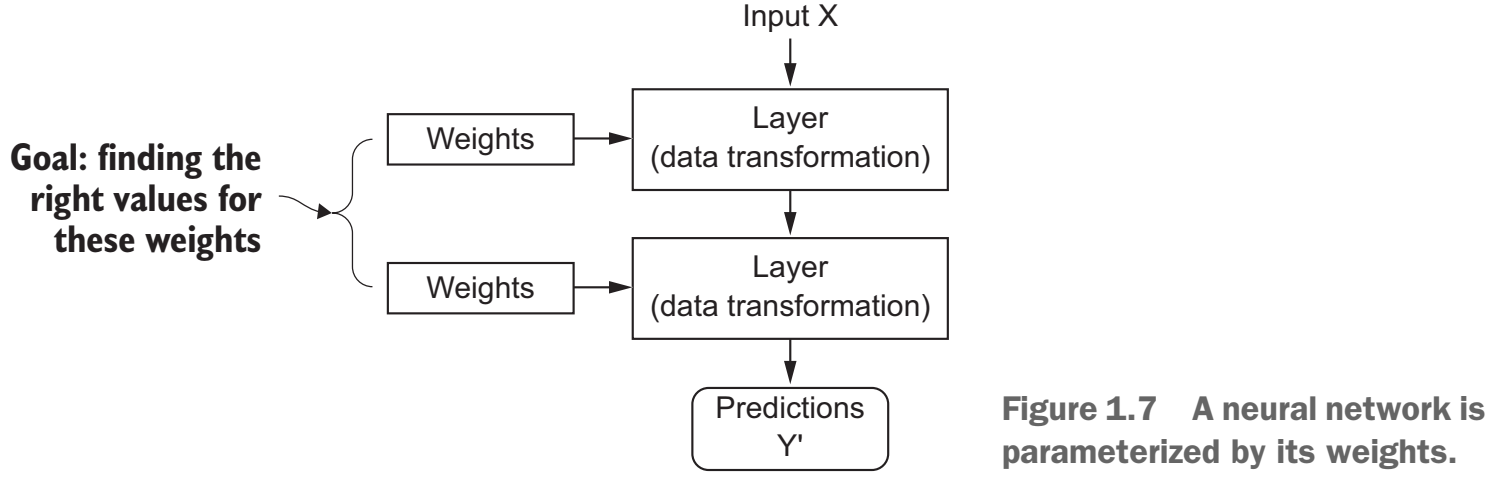

A machine-learning model transforms its input data into meaningful outputs, a process that is “learned” from exposure to known examples of inputs and outputs. Therefore, the central problem in machine learning and deep learning is to meaningfully transform data.
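The contrast between the two paradigms can be sketched in a few lines. This is only an illustration, not an example from the book: the Celsius-to-Fahrenheit task, the function names, and the choice of ordinary least squares are all assumptions made for this sketch. The classical version has the rule written by hand; the learned version recovers the rule from input data points, examples of the expected output, and an error measure.

```python
# Classical programming: a human writes the rule explicitly.
def fahrenheit_rule(celsius):
    return celsius * 9 / 5 + 32


# Machine learning: the rule (w, b) is estimated from examples.
def fit_line(xs, ys):
    """Ordinary least squares for y = w * x + b (closed form)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    w = cov / var
    b = mean_y - w * mean_x
    return w, b


def mean_squared_error(xs, ys, w, b):
    """A way to measure whether the learned rule is doing a good job."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Hypothetical training data: input data points and expected outputs.
inputs = [-10.0, 0.0, 15.0, 30.0, 100.0]
targets = [fahrenheit_rule(c) for c in inputs]

w, b = fit_line(inputs, targets)
error = mean_squared_error(inputs, targets, w, b)
print(f"learned rule: y = {w:.3f} * x + {b:.3f}, error = {error:.6f}")
```

The learned `w` and `b` should come out close to 1.8 and 32, even though the rule was never given to `fit_line` directly.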

Why is computer vision difficult?

How does a computer see the above picture?

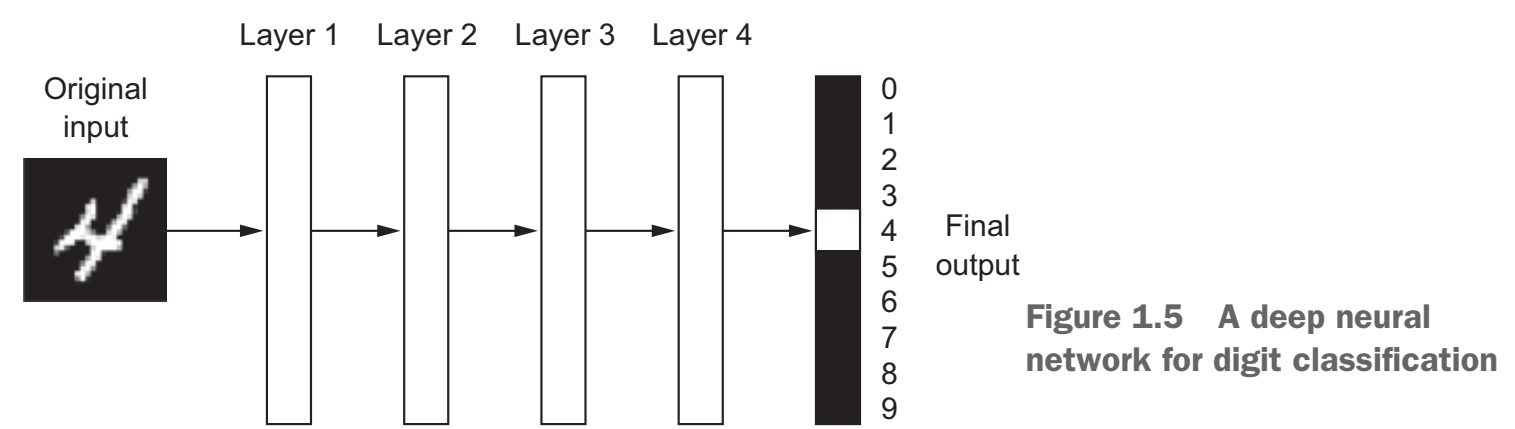

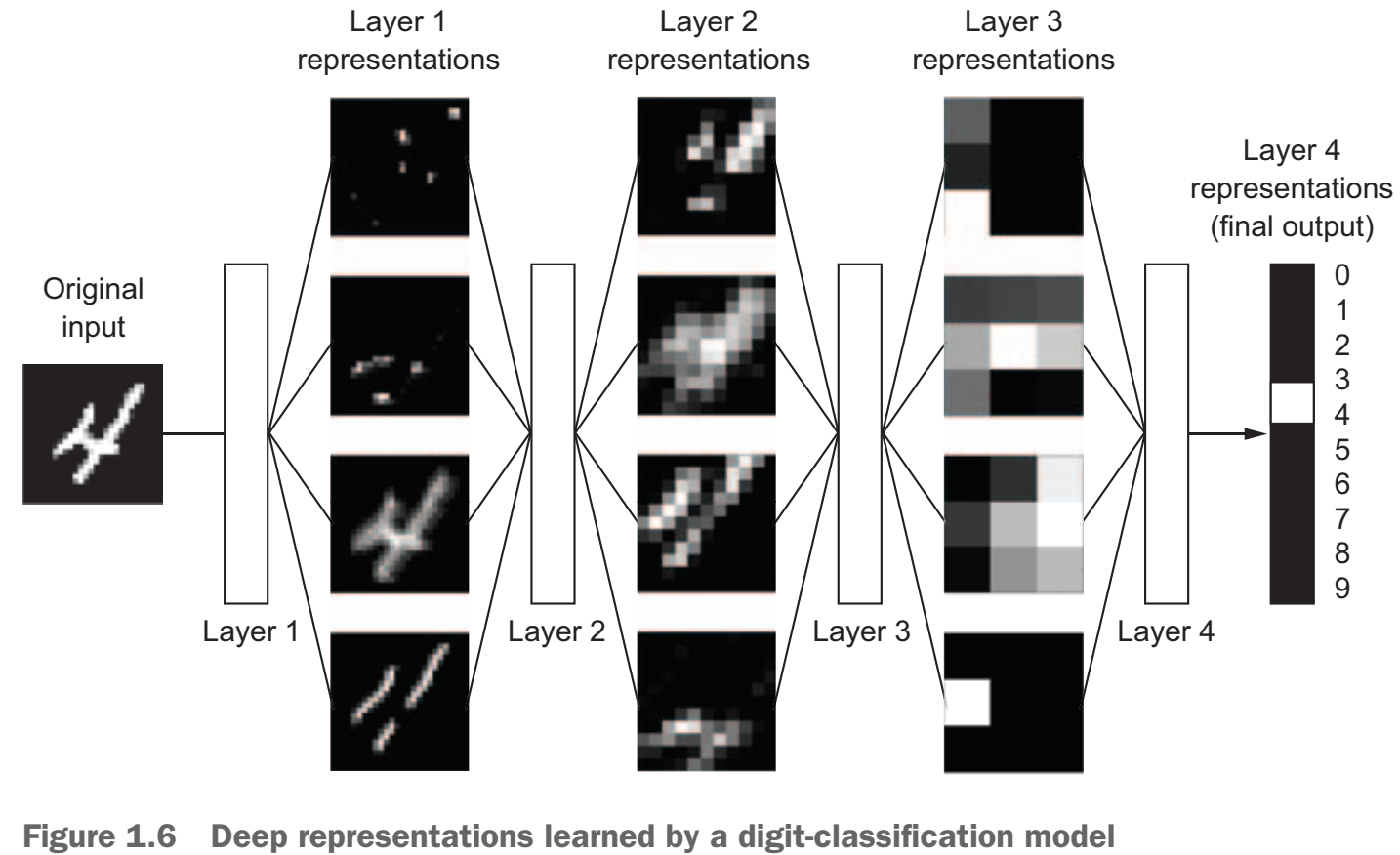
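One way to make the point concrete: to a computer, an image is only a grid of numbers. The sketch below uses a made-up 5x5 grayscale image (the pixel values and helper names are assumptions for illustration) and shows that shifting the same shape by one pixel changes many of the raw values, although a person would still see the same object.

```python
# A hypothetical 5x5 grayscale image: 0 = black, 255 = white.
image = [
    [0,   0,   0,   0, 0],
    [0, 255, 255, 255, 0],
    [0, 255,   0, 255, 0],
    [0, 255, 255, 255, 0],
    [0,   0,   0,   0, 0],
]

height = len(image)
width = len(image[0])


def shift_right(img, fill=0):
    """Move every pixel one column to the right, padding with `fill`."""
    return [[fill] + row[:-1] for row in img]


def count_differing_pixels(a, b):
    """Number of positions where the two images hold different values."""
    return sum(pa != pb for ra, rb in zip(a, b) for pa, pb in zip(ra, rb))


shifted = shift_right(image)
changed = count_differing_pixels(image, shifted)
print(f"{height}x{width} image: {changed} of {height * width} pixels "
      "changed after a 1-pixel shift")
```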

The “deep” in deep learning

- The deep in deep learning isn’t a reference to any kind of deeper understanding achieved by the approach.

- Rather, it stands for the idea of successive layers of representations.

- The number of layers that contribute to a model of the data is called the depth of the model.
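The idea of successive layers of representations can be sketched as a chain of simple transformations. This is a minimal illustration under stated assumptions: the weights are hand-picked rather than learned, ReLU is chosen arbitrarily as the nonlinearity, and `dense` and `forward` are hypothetical helper names, not a library API.

```python
def relu(values):
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """One layer: weighted sums of the inputs, followed by ReLU."""
    sums = [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]
    return relu(sums)


# Hypothetical, hand-picked weights; a real model would learn them from data.
layers = [
    ([[1.0, -1.0], [0.5, 0.5], [-1.0, 1.0]], [0.0, 0.0, 0.0]),  # 2 inputs -> 3 units
    ([[1.0, 0.0, 1.0], [0.0, 1.0, 0.0]], [0.0, -0.5]),          # 3 units -> 2 units
    ([[1.0, 1.0]], [0.0]),                                       # 2 units -> 1 output
]


def forward(inputs, layers):
    """Return the input plus the representation produced by each layer."""
    representations = [inputs]
    for weights, biases in layers:
        representations.append(dense(representations[-1], weights, biases))
    return representations


representations = forward([2.0, 1.0], layers)
depth = len(layers)  # the depth of the model is its number of layers

for index, rep in enumerate(representations):
    print(f"representation {index}: {rep}")
print(f"depth = {depth}")
```

Each entry in `representations` is the data as seen after one more layer; the model here has depth 3.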

Deep Learning Layers

Neural Networks

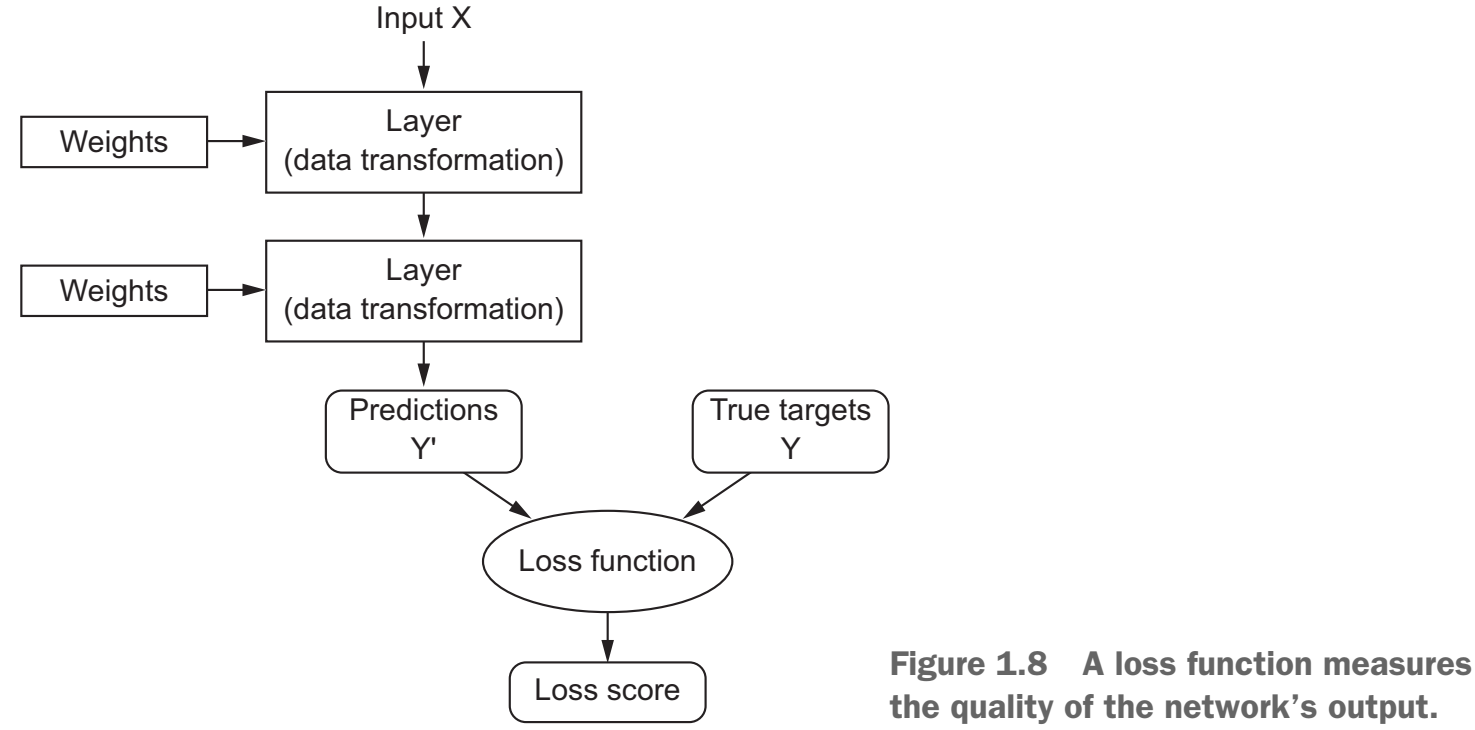

Loss Function

Optimizer
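The roles of the loss function and the optimizer can be sketched on a one-weight model. The slides name only the two concepts, so everything specific here is an assumption: mean squared error as the loss, plain full-batch gradient descent as the optimizer, the learning rate, and the toy data generated by y = 3x.

```python
def predict(w, x):
    return w * x


def loss(w, xs, ys):
    """Mean squared error: how far the predictions are from the targets."""
    return sum((predict(w, x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def gradient(w, xs, ys):
    """Derivative of the mean squared error with respect to w."""
    return sum(2 * (predict(w, x) - y) * x for x, y in zip(xs, ys)) / len(xs)


def gradient_descent_step(w, grad, learning_rate):
    """Optimizer: move the weight a small step against the gradient."""
    return w - learning_rate * grad


# Hypothetical data generated by y = 3x.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 6.0, 9.0, 12.0]

w = 0.0  # arbitrary starting weight
history = [loss(w, xs, ys)]
for _ in range(50):
    w = gradient_descent_step(w, gradient(w, xs, ys), learning_rate=0.05)
    history.append(loss(w, xs, ys))

print(f"learned w = {w:.4f}, first loss = {history[0]:.2f}, "
      f"last loss = {history[-1]:.6f}")
```

The loss score is the feedback signal; the optimizer uses it to adjust the weight, and repeating the loop drives `w` toward 3.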

Deep Learning breakthroughs

- Near-human-level image classification

- Near-human-level speech recognition

- Near-human-level handwriting transcription

- Improved machine translation

- Improved text-to-speech conversion

- Digital assistants such as Google Now and Amazon Alexa

- Near-human-level autonomous driving

- Ability to answer natural-language questions

Deep Learning

- Neural Networks

- Multiple layers

- Fed with lots of Data

History

- 1980+ : Lots of enthusiasm for NNs

- 1995+ : Disillusionment = A.I. Winter (v2+)

- 2005+ : Stepwise improvement : Depth

- 2010+ : GPU revolution : Data

Who is involved

- Google - Hinton (Toronto)

- Facebook - LeCun (NYC)

- Universities, e.g. Montreal (Bengio)

- Baidu - Ng (Stanford)

- ... Apple (acquisitions), etc

Who is involved

| Organization | Researcher (location) |
| --- | --- |
| Google | Hinton (Toronto) |
| Facebook | LeCun (NYC) |
| Universities | Bengio (Montreal) |
| Baidu | Ng (Stanford) |

Andrew Ng:

“AI is the new electricity.”

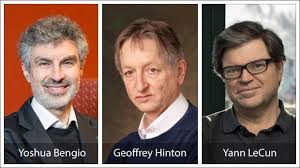

2011, Image Classification

The ImageNet challenge was difficult at the time: it consisted of classifying high-resolution color images into 1,000 different categories after training on 1.4 million images.

Deep Learning started to beat other approaches...

- In 2011, Dan Ciresan from IDSIA began to win academic image-classification competitions with GPU-trained deep neural networks

- In 2011, the top-five accuracy of the winning model, based on classical approaches to computer vision, was only 74.3%.

- In 2012, a team led by Alex Krizhevsky and advised by Geoffrey Hinton was able to achieve a top-five accuracy of 83.6%—a significant breakthrough

- By 2015, the winner reached an accuracy of 96.4%, and the classification task on ImageNet was considered to be a completely solved problem
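The figures above are top-five accuracies: a prediction counts as correct when the true class is among the model's five highest-scoring classes. A minimal sketch of that metric follows; the scores and labels are invented for illustration (six classes instead of 1,000) and are not ImageNet results, and `top_k_accuracy` is a hypothetical helper name.

```python
def top_k_accuracy(scores, labels, k=5):
    """Fraction of examples whose true label is among the k best-scoring classes."""
    hits = 0
    for class_scores, label in zip(scores, labels):
        ranked = sorted(
            range(len(class_scores)),
            key=lambda c: class_scores[c],
            reverse=True,
        )
        if label in ranked[:k]:
            hits += 1
    return hits / len(labels)


# Hypothetical scores for 4 images over 6 classes.
scores = [
    [0.50, 0.20, 0.12, 0.10, 0.05, 0.03],  # true class 0: ranked 1st
    [0.30, 0.25, 0.20, 0.15, 0.07, 0.03],  # true class 4: ranked 5th
    [0.02, 0.08, 0.10, 0.15, 0.25, 0.40],  # true class 5: ranked 1st
    [0.40, 0.30, 0.15, 0.10, 0.04, 0.01],  # true class 5: ranked 6th
]
labels = [0, 4, 5, 5]

top1 = top_k_accuracy(scores, labels, k=1)
top5 = top_k_accuracy(scores, labels, k=5)
print(f"top-1 accuracy = {top1:.2f}, top-5 accuracy = {top5:.2f}")
```

Top-five accuracy is always at least as high as top-one accuracy, which is why it is the more forgiving measure on a 1,000-class task.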

What makes deep learning different?

It completely automates what used to be the most crucial step in a machine-learning workflow: feature engineering.

Why deep learning? Why now?

In general, three technical forces are driving advances:

- Hardware: NVIDIA GPUs, Google TPUs

- Datasets and benchmarks: Flickr, YouTube videos, and Wikipedia

- Algorithmic advances:

  - Better activation functions

  - Better weight-initialization schemes

  - Better optimization schemes

- Questions? -

m.amintoosi @ gmail.com

webpage : http://mamintoosi.ir

webpage in github : http://mamintoosi.github.io

github : mamintoosi